iSpLib: A library for accelerating graph neural networks using auto-tuned sparse operations

Background

Training and inference in Graph Neural Networks (GNNs) often rely on sparse matrix operations such as sparse-dense matrix multiplication (SpMM). These operations are difficult to optimize by hand because their performance depends on the sparsity pattern of the input graph, the architecture of the GNN model, and the characteristics of the underlying hardware. Existing frameworks such as PyTorch and PyTorch Geometric provide general-purpose implementations, but these often fail to fully exploit the hardware or the sparsity patterns specific to GNN workloads, which leads to suboptimal performance.
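To make SpMM concrete, here is a minimal, unoptimized Python sketch. It is an illustration only, not iSpLib's kernel. It multiplies a sparse adjacency matrix stored in compressed sparse row (CSR) format by a dense node-feature matrix, which is the neighborhood-aggregation step of a GCN-style layer. The function name `spmm_csr` and the toy graph are hypothetical. Each row's work is proportional to its number of nonzeros, which is one reason performance is sensitive to the graph's sparsity pattern.

```python
from typing import List


def spmm_csr(indptr: List[int], indices: List[int], data: List[float],
             dense: List[List[float]]) -> List[List[float]]:
    """Compute Y = A @ X, where A is sparse (CSR) and X is a dense feature matrix."""
    n_rows = len(indptr) - 1
    d = len(dense[0]) if dense else 0
    out = [[0.0] * d for _ in range(n_rows)]
    for i in range(n_rows):
        row_out = out[i]
        # Only the stored nonzeros of row i (the neighbors of node i) contribute.
        for k in range(indptr[i], indptr[i + 1]):
            j, a_ij = indices[k], data[k]
            x_j = dense[j]
            for c in range(d):
                row_out[c] += a_ij * x_j[c]
    return out


# Toy path graph 0-1-2 with unit edge weights and no self-loops.
indptr = [0, 1, 3, 4]
indices = [1, 0, 2, 1]
data = [1.0, 1.0, 1.0, 1.0]
features = [[1.0, 0.0], [0.0, 1.0], [2.0, 3.0]]
print(spmm_csr(indptr, indices, data, features))  # [[0.0, 1.0], [3.0, 3.0], [0.0, 1.0]]
```

Each output row is the sum of the feature vectors of that node's neighbors. For example, node 1 aggregates nodes 0 and 2 to get `[3.0, 3.0]`.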

Methodology

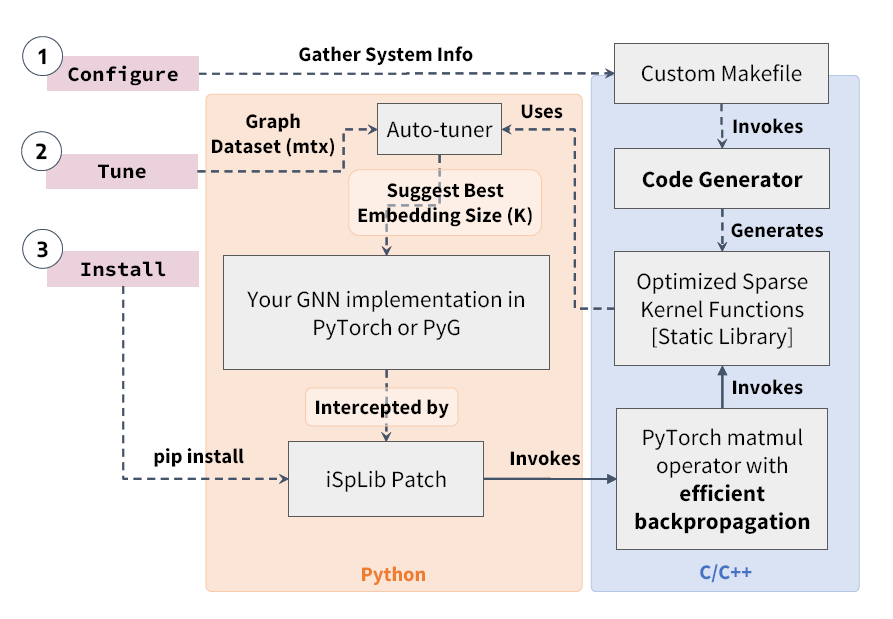

To address these challenges, we introduce iSpLib, a PyTorch-based C++ library that provides auto-tuned sparse operations specifically designed to accelerate GNN training. Key features of iSpLib include:

Cache-enabled backpropagation: Intermediate matrices are stored in local caches during training, reducing redundant computations and improving efficiency.

Python plug-in interface: Users can easily integrate iSpLib’s optimized sparse operations into any existing GNN model, including Graph Convolution Networks (GCNs), GraphSAGE, or Graph Inference Networks, with only two additional lines of code.

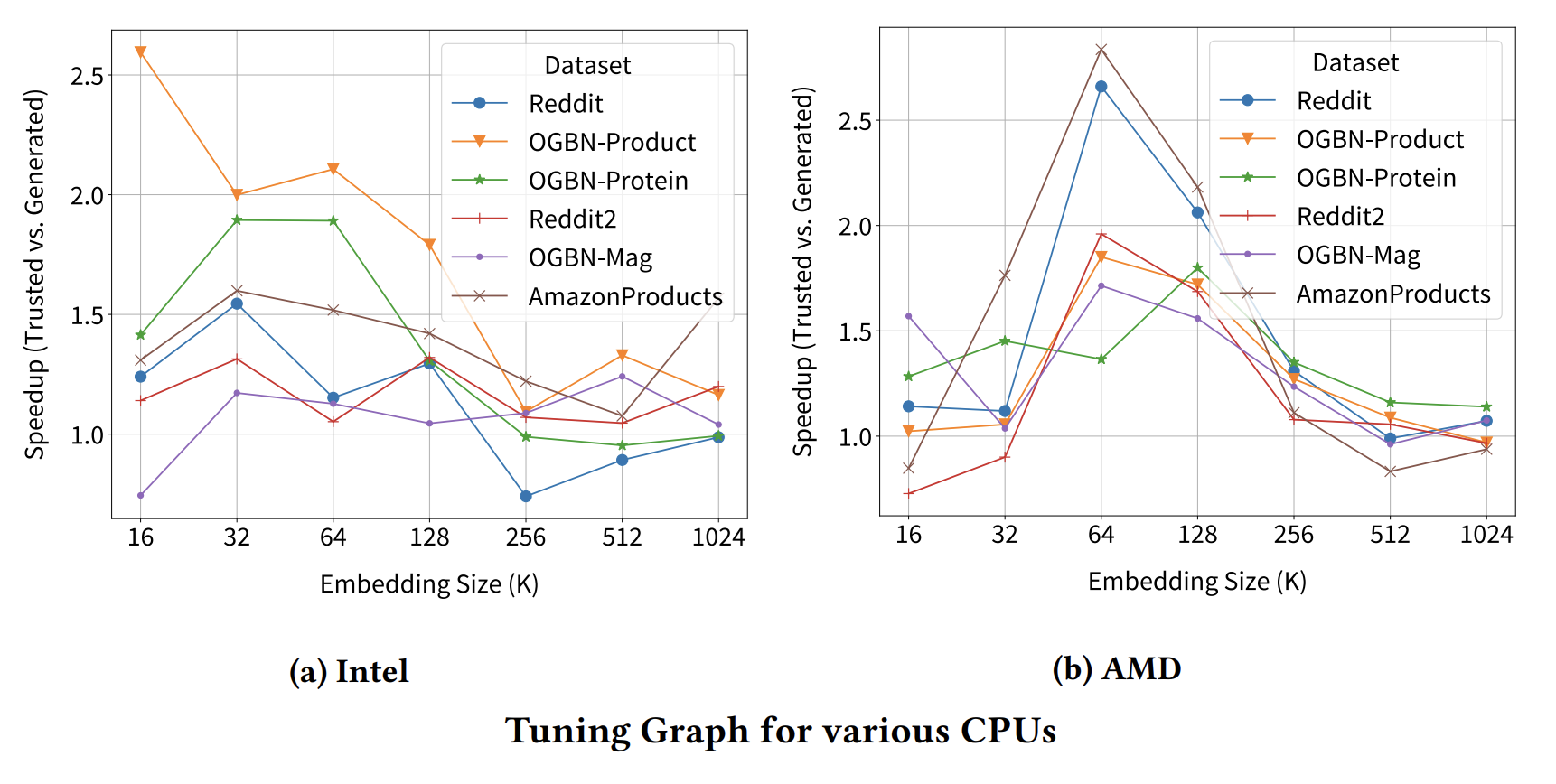

Auto-tuning mechanisms: Sparse operations are automatically tuned based on the input graph structure, GNN model, and hardware characteristics, minimizing manual optimization effort.
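One way to picture cache-enabled backpropagation is the backward pass of an SpMM layer. For Y = A X, the input gradient is dL/dX = Aᵀ (dL/dY), so every training iteration needs the transpose of the same adjacency matrix. The framework-free Python sketch below assumes that the cached intermediate is this transposed matrix, built once per graph and reused. That choice is ours for illustration; iSpLib may cache other intermediates. The class `CachedSpMM`, its methods, and the string graph key are hypothetical. A real PyTorch integration would typically go through `torch.autograd.Function` instead.

```python
from typing import Dict, List, Tuple

# Hypothetical CSR triple: (indptr, indices, data).
CSR = Tuple[List[int], List[int], List[float]]
Dense = List[List[float]]


def spmm_csr(a: CSR, x: Dense) -> Dense:
    """Y = A @ X for sparse A (CSR) and dense X."""
    indptr, indices, data = a
    d = len(x[0]) if x else 0
    out = [[0.0] * d for _ in range(len(indptr) - 1)]
    for i in range(len(indptr) - 1):
        for k in range(indptr[i], indptr[i + 1]):
            v, xj = data[k], x[indices[k]]
            for c in range(d):
                out[i][c] += v * xj[c]
    return out


def transpose_csr(a: CSR, n_cols: int) -> CSR:
    """Build A^T in CSR form with a counting sort over column indices."""
    indptr, indices, data = a
    starts = [0] * (n_cols + 1)
    for j in indices:              # count nonzeros per column of A
        starts[j + 1] += 1
    for j in range(n_cols):        # prefix sum -> row pointers of A^T
        starts[j + 1] += starts[j]
    t_indices = [0] * len(indices)
    t_data = [0.0] * len(data)
    next_slot = starts[:-1]        # copy: next free position in each row of A^T
    for i in range(len(indptr) - 1):
        for k in range(indptr[i], indptr[i + 1]):
            pos = next_slot[indices[k]]
            t_indices[pos], t_data[pos] = i, data[k]
            next_slot[indices[k]] += 1
    return starts, t_indices, t_data


class CachedSpMM:
    """Hypothetical op: forward Y = A X; backward dX = A^T dY with A^T cached per graph."""

    def __init__(self) -> None:
        self._transpose_cache: Dict[str, CSR] = {}
        self.transposes_built = 0  # counts cache misses, for illustration

    def forward(self, a: CSR, x: Dense) -> Dense:
        return spmm_csr(a, x)

    def backward(self, key: str, a: CSR, n_cols: int, grad_y: Dense) -> Dense:
        if key not in self._transpose_cache:  # first iteration: build and store A^T
            self._transpose_cache[key] = transpose_csr(a, n_cols)
            self.transposes_built += 1
        return spmm_csr(self._transpose_cache[key], grad_y)  # later iterations reuse it


# A = [[1, 0, 2], [0, 3, 0]] stored as CSR.
a = ([0, 2, 3], [0, 2, 1], [1.0, 2.0, 3.0])
op = CachedSpMM()
for _ in range(3):  # three training iterations over the same graph
    grad_x = op.backward("graph0", a, 3, [[1.0], [1.0]])
print(grad_x, op.transposes_built)  # [[1.0], [3.0], [2.0]] 1
```

Across three iterations the transpose is built only once, which is the kind of redundant work a training-time cache avoids.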

Findings

Experimental evaluations show that iSpLib substantially outperforms standard implementations. Specifically, training GNNs with iSpLib achieves up to a 27× speedup on CPU compared with PyTorch 2.1.0 and PyTorch Geometric 2.4.